Today was one of the capstone projects. The task was to build a bot in Python that automatically records the information for each rental listing as a separate Google Form response. For this task we will use Python, Selenium, and Beautiful Soup. Oh, and we will also need the time module. I didn't plan on using it at first, but I was running into some issues, and slowing the process down made some steps much easier to watch and debug.

Again, I am a beginner, and this is how I did it. So here we go. First, we import all the libraries we will need:

import time

import requests

from selenium import webdriver

from selenium.webdriver.common.by import By

from bs4 import BeautifulSoup

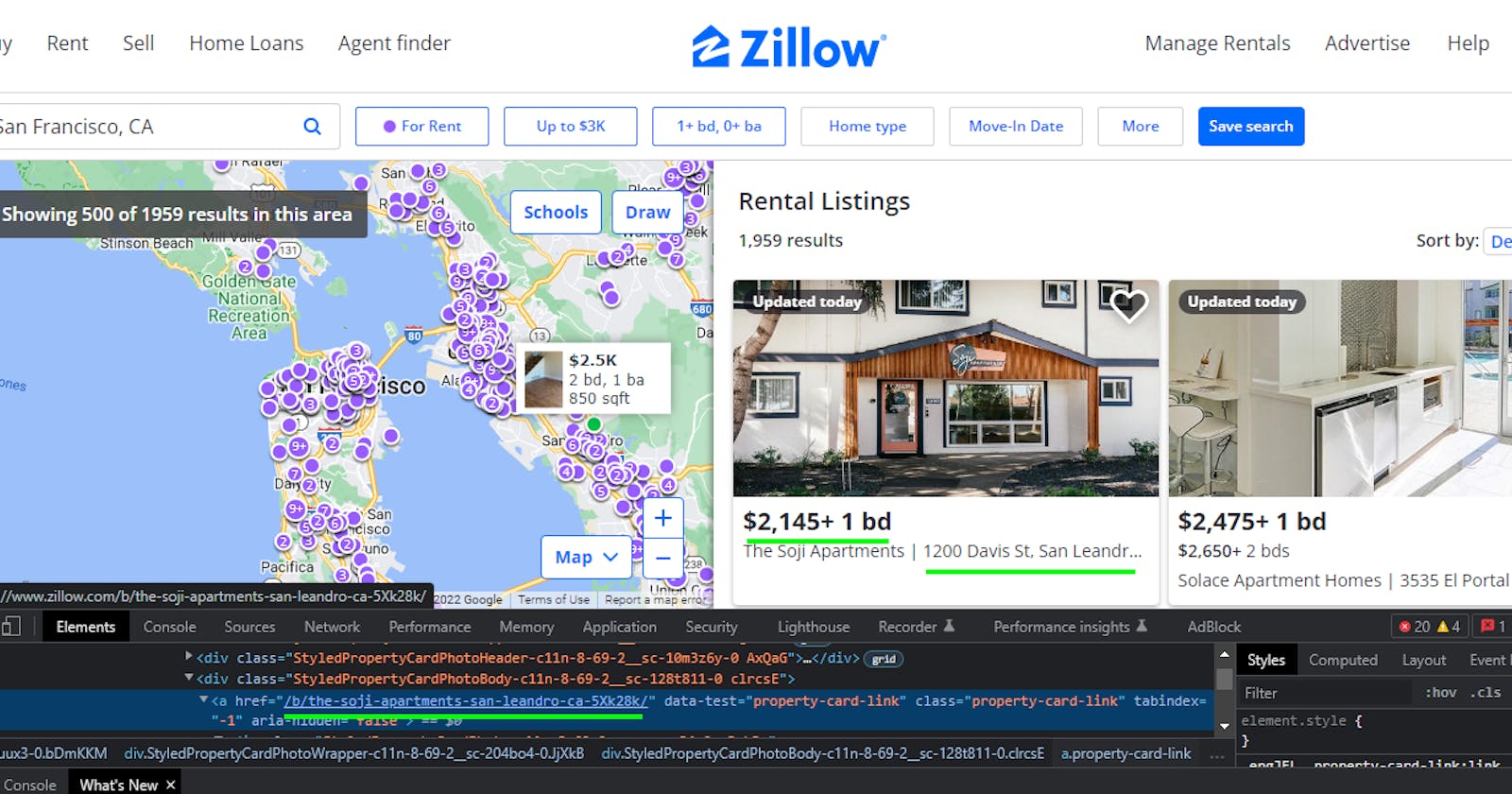

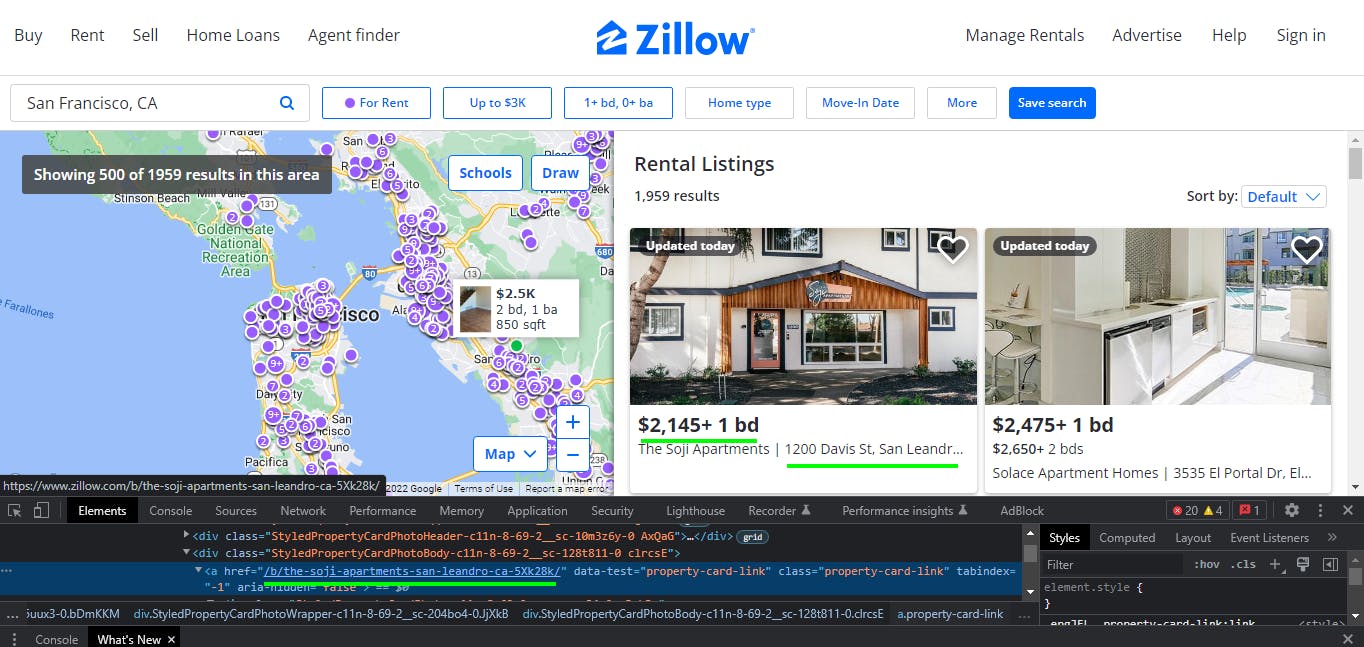

Next, I connect to the Zillow rental listings. To do that, I need to identify my user agent and language in the request headers. I use Chrome in English, so:

user_agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36"

language = "en,en-US;q=0.9"

endpoint = "https://www.zillow.com/homes/for_rent/1-_beds/?searchQueryState=%7B%22pagination%22%3A%7B%7D%2C%22usersSearchTerm%22%3Anull%2C%22mapBounds%22%3A%7B%22west%22%3A-122.56276167822266%2C%22east%22%3A-122.30389632177734%2C%22south%22%3A37.69261345230467%2C%22north%22%3A37.857877098316834%7D%2C%22isMapVisible%22%3Atrue%2C%22filterState%22%3A%7B%22fr%22%3A%7B%22value%22%3Atrue%7D%2C%22fsba%22%3A%7B%22value%22%3Afalse%7D%2C%22fsbo%22%3A%7B%22value%22%3Afalse%7D%2C%22nc%22%3A%7B%22value%22%3Afalse%7D%2C%22cmsn%22%3A%7B%22value%22%3Afalse%7D%2C%22auc%22%3A%7B%22value%22%3Afalse%7D%2C%22fore%22%3A%7B%22value%22%3Afalse%7D%2C%22pmf%22%3A%7B%22value%22%3Afalse%7D%2C%22pf%22%3A%7B%22value%22%3Afalse%7D%2C%22mp%22%3A%7B%22max%22%3A3000%7D%2C%22price%22%3A%7B%22max%22%3A872627%7D%2C%22beds%22%3A%7B%22min%22%3A1%7D%7D%2C%22isListVisible%22%3Atrue%2C%22mapZoom%22%3A12%7D"

headers = {

"User-Agent": user_agent,

"Accept-Language": language

}

response = requests.get(endpoint, headers=headers)

rental_page = response.text
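The long endpoint above is easier to read once you know that the `searchQueryState` parameter is just percent-encoded JSON. Here is a small sketch of that idea using only the standard library. The `search_state` dictionary is a trimmed-down illustration (the real URL carries more filters), and `decode_search_state` is a helper name I made up for this example.

```python
import json
from urllib.parse import parse_qs, urlencode, urlsplit

# Trimmed-down, illustrative search state; the real URL carries more filters.
search_state = {
    "mapBounds": {"west": -122.56, "east": -122.30, "south": 37.69, "north": 37.86},
    "filterState": {"mp": {"max": 3000}, "beds": {"min": 1}},
}

# Encode: dump the dict to JSON text, then percent-encode it as a query parameter.
base_url = "https://www.zillow.com/homes/for_rent/1-_beds/"
demo_endpoint = base_url + "?" + urlencode({"searchQueryState": json.dumps(search_state)})


def decode_search_state(url):
    """Return the searchQueryState parameter of `url` as a Python dict."""
    query = parse_qs(urlsplit(url).query)
    return json.loads(query["searchQueryState"][0])


decoded = decode_search_state(demo_endpoint)
print(decoded["filterState"]["mp"]["max"])  # 3000
```

Calling `decode_search_state(endpoint)` on the real endpoint should show the same kind of structure, including the map bounds and the maximum monthly price of 3000.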

After that, we create the soup object to start scraping the page:

soup = BeautifulSoup(rental_page, "html.parser")

We will need the address, price, and link for each listing.

Each listing is inside an article tag, so let's find all of them:

articles = soup.find_all("article")
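To get a feel for what `find_all` returns and how the three fields can be pulled out of each card, here is a sketch that runs on a made-up HTML snippet instead of the live site. The sample markup, the addresses, and the `parse_listings` helper are all assumptions for illustration; the real Zillow markup is more complex and changes over time.

```python
from bs4 import BeautifulSoup

# Made-up HTML that only mimics the general shape of a listing card.
sample_html = """
<ul>
  <li><article>
    <a class="property-card-link" href="/b/example-one/">
      <address>1 Example St, San Francisco, CA</address>
    </a>
    <span>$2,500/mo</span>
  </article></li>
  <li><article>
    <a class="property-card-link" href="https://www.zillow.com/homedetails/example-two/">
      <address>2 Sample Ave, San Francisco, CA</address>
    </a>
    <span>$2,900+ 1 bd</span>
  </article></li>
</ul>
"""


def parse_listings(html):
    """Return a list of dicts with the link, price, and address of each <article>."""
    soup = BeautifulSoup(html, "html.parser")
    listings = []
    for article in soup.find_all("article"):
        link_tag = article.find(class_="property-card-link")
        price_tag = article.find(name="span")
        address_tag = article.find(name="address")
        # Skip cards that are missing any of the three fields.
        if link_tag is None or price_tag is None or address_tag is None:
            continue
        listings.append({
            "link": link_tag.get("href"),
            "price": price_tag.get_text(strip=True),
            "address": address_tag.get_text(strip=True),
        })
    return listings


for listing in parse_listings(sample_html):
    print(listing)
```

The `None` checks are there because `find` returns `None` when nothing matches, and calling `.get` or `.get_text` on `None` would raise an `AttributeError`.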

Later I will have the for loop, so while we are still at the top of the script, I will set up the connection to the Google Form here:

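from selenium.webdriver.chrome.service import Service

chrome_driver_path = "C:/Development/chromedriver.exe"

driver = webdriver.Chrome(service=Service(chrome_driver_path))  # Selenium 4 style; executable_path= was removed in Selenium 4.10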

driver.get("https://forms.gle/R18qdRntB3J9jhbR6")

Here I also define my inputs. I will define them again later because, as I learned, after the page reloads the old references go stale and you need to locate the same elements again.

what_address = driver.find_element(By.XPATH,

'//*[@id="mG61Hd"]/div[2]/div/div[2]/div[1]/div/div/div[2]/div/div[1]/div/div[1]/input')

what_price = driver.find_element(By.XPATH,

'//*[@id="mG61Hd"]/div[2]/div/div[2]/div[2]/div/div/div[2]/div/div[1]/div/div[1]/input')

what_link = driver.find_element(By.XPATH,

'//*[@id="mG61Hd"]/div[2]/div/div[2]/div[3]/div/div/div[2]/div/div[1]/div/div[1]/input')
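Since the three XPaths differ only in one index and the same lookups are needed again after every reload, they could be wrapped in a small helper. This is just a sketch: `INPUT_XPATH` and `find_form_inputs` are names I made up, and the strategy is passed as the plain string `"xpath"`, which is the value behind `By.XPATH`, so the snippet does not need Selenium to be imported.

```python
# XPath template shared by the three inputs; only the question index changes.
INPUT_XPATH = (
    '//*[@id="mG61Hd"]/div[2]/div/div[2]/div[{index}]'
    '/div/div/div[2]/div/div[1]/div/div[1]/input'
)


def find_form_inputs(driver):
    """Return the (address, price, link) inputs of the currently loaded form."""
    return tuple(
        driver.find_element("xpath", INPUT_XPATH.format(index=i))  # "xpath" == By.XPATH
        for i in (1, 2, 3)
    )


# Hypothetical usage with a real Selenium driver, before the loop and after each reload:
# what_address, what_price, what_link = find_form_inputs(driver)
```

With a helper like this, the block of three `find_element` calls only has to be written once.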

Now I use a for loop to iterate through the list of articles (aka listings) and find the link, address, and price:

for article in articles:

    link = article.find(class_="property-card-link").get("href")

Since some listings have only a partial link, I do a small check on each one. If the link is not a full URL, I prepend the "https://www.zillow.com" part so it becomes a full link:

    if link[0] == "/":

        link = "https://www.zillow.com" + link

    time.sleep(1)

    price = article.find(name="span").get_text()

    address = article.find(name="address").get_text()
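As an alternative to checking the first character by hand, the standard library's `urljoin` can do the same job. The sketch below is not what the bot above uses. `BASE_URL` and `absolute_link` are names I picked for the example, and the two sample paths are made up.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.zillow.com"


def absolute_link(href):
    """Return `href` as a full URL; links that are already absolute pass through unchanged."""
    return urljoin(BASE_URL, href)


print(absolute_link("/b/example-building/"))
# https://www.zillow.com/b/example-building/
print(absolute_link("https://www.zillow.com/homedetails/example/"))
# https://www.zillow.com/homedetails/example/
```

One small difference: `link[0]` raises an `IndexError` when the href is an empty string, while `urljoin` simply returns the base URL in that case.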

Now, moving on to our form (we are still inside the loop), we take each input and record the listing's info in the corresponding field:

    what_address.send_keys(address)

    what_price.send_keys(price)

    what_link.send_keys(link)

Next, we locate and click the submit button to submit the form. This happens once for each listing:

    submit_button = driver.find_element(By.CLASS_NAME, "NPEfkd")

    submit_button.click()

    time.sleep(1)

Lastly, we locate the refresh button and click it, then reload the form page and locate all the input elements again:

    refresh_form = driver.find_element(By.CLASS_NAME, "c2gzEf")

    refresh_form.click()

    time.sleep(1)

    driver.get("https://forms.gle/R18qdRntB3J9jhbR6")

    what_address = driver.find_element(By.XPATH,

        '//*[@id="mG61Hd"]/div[2]/div/div[2]/div[1]/div/div/div[2]/div/div[1]/div/div[1]/input')

    what_price = driver.find_element(By.XPATH,

        '//*[@id="mG61Hd"]/div[2]/div/div[2]/div[2]/div/div/div[2]/div/div[1]/div/div[1]/input')

    what_link = driver.find_element(By.XPATH,

        '//*[@id="mG61Hd"]/div[2]/div/div[2]/div[3]/div/div/div[2]/div/div[1]/div/div[1]/input')
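The one-second pauses in the loop work, but a fixed sleep can be too short on a slow connection and wasteful on a fast one. A common alternative is to poll until a condition becomes true. Selenium has its own `WebDriverWait` for this; the sketch below is only a plain-Python stand-in to show the idea, and `wait_until` is a name I made up.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.25):
    """Call `condition()` repeatedly until it returns something truthy.

    Returns that truthy value, or raises TimeoutError once `timeout`
    seconds have passed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Hypothetical usage with the driver from this post. find_elements returns
# an empty list while the button is not on the page yet:
# buttons = wait_until(lambda: driver.find_elements(By.CLASS_NAME, "NPEfkd"))
# buttons[0].click()
```

The loop moves on as soon as the element shows up instead of always waiting the full second.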

This is how I did it. Now it is time to sleep zzzzzzzzzzzzzzzzzzzzzzzzzzz.......................